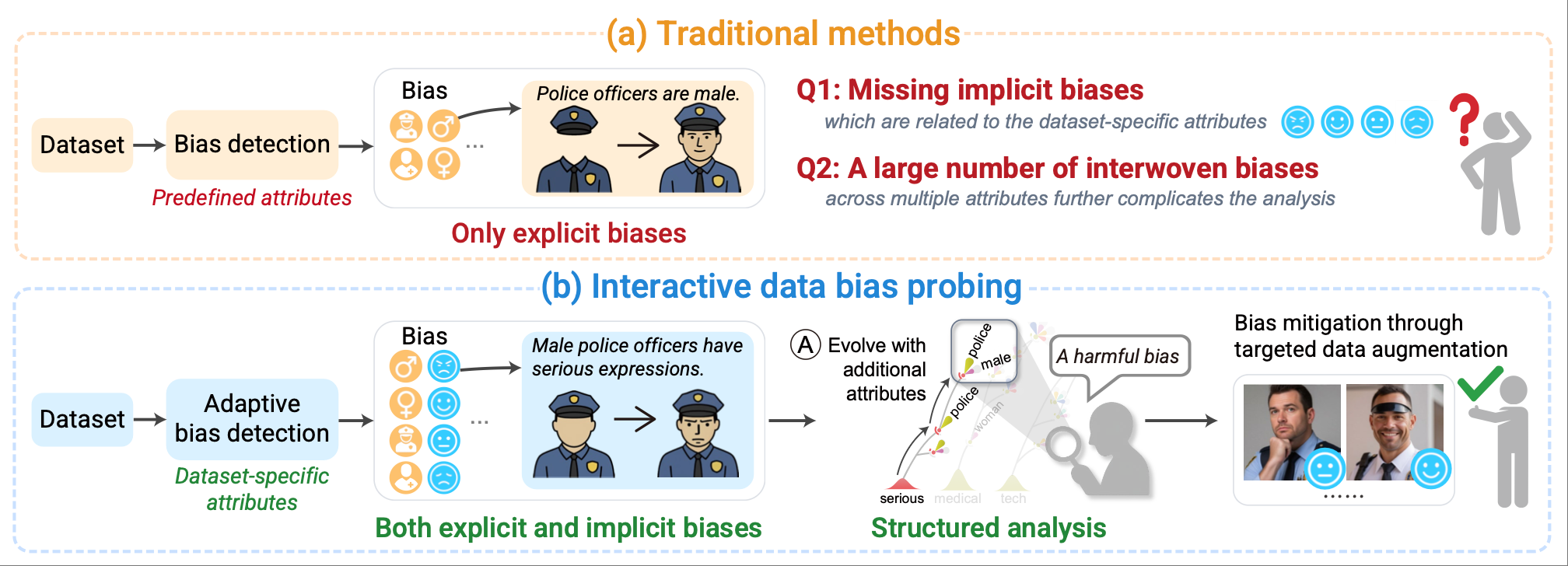

Bias in machine learning datasets occurs when certain attributes are unfairly associated, e.g., males with serious expressions being disproportionately linked with law enforcement officers in job-related image datasets. Training models on biased datasets can degrade model performance and lead to fairness issues, particularly for underrepresented groups. Existing bias detection methods mainly focus on explicit biases associated with predefined attributes (e.g., gender and ethnicity) while overlooking implicit biases associated with subtler, dataset-specific attributes (e.g., facial expressions and attire). To address this gap, we present BiasField, an interactive tool that offers a closed-loop workflow for detecting, analyzing, and mitigating bias. Central to BiasField is the adaptive detection of both explicit and implicit biases, facilitated by the automatic extraction of dataset-specific attributes. BiasField then employs a plant-growth metaphor to visualize these biases, enabling structured analysis to identify similar biases and track how they strengthen as additional attributes are included. Finally, confirmed biases are mitigated through targeted generative data augmentation. A user study, two case studies, and an expert study demonstrate BiasField's capability to detect, analyze, and mitigate complex biases.

BiasField: Interactive Bias Probing of Machine Learning Datasets

Online Submission ID: 5462

Pacific VIS 2025
